- Type: New Feature

- Resolution: Unresolved

- None

- Affects Version/s: None

- Component/s: TM Maintenance

- High


Problem

Currently it is not possible to search multiple TMs at the same time and batch-delete segments from them. This is time-consuming for users.

Solution

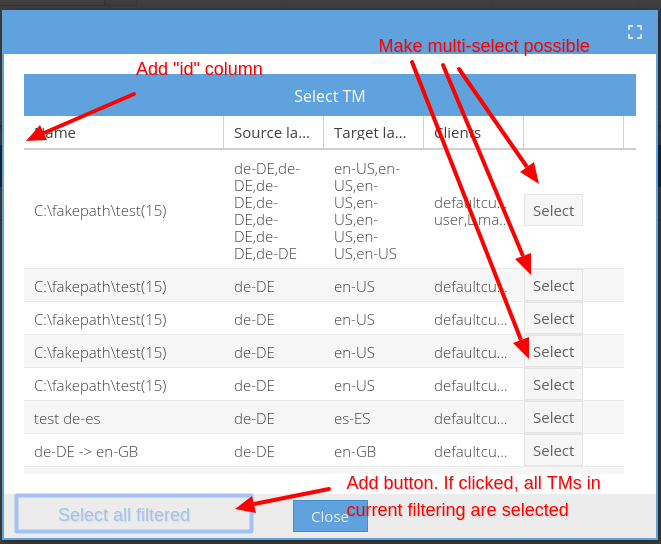

Enable multi-select of TMs as outlined in the screenshot. Alongside this, add an ID column to the window so TMs can also be filtered by ID.

Also add a button that selects all TMs matching the current filter.

Please also make the window much bigger by default.

After the total number, add an info icon with a tooltip that lists the number of found segments for each selected language resource.

Two new columns have to be added to the result table:

- Language resource id

- Language resource name
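A minimal TypeScript sketch of how the FE could build the per-LR tooltip next to the total number. The `ResultRow` and `LanguageResource` shapes and the `buildCountTooltip` name are hypothetical. The counts are taken from the rows loaded so far; if the BE reports a total per LR, that value would be used instead.

```typescript
// Hypothetical row shape: the two new columns plus the segment texts.
interface ResultRow {
  languageResourceId: number;
  languageResourceName: string;
  source: string;
  target: string;
}

// Hypothetical shape of a selected language resource.
interface LanguageResource {
  id: number;
  name: string;
}

// One tooltip line per selected LR, including LRs without hits, in the
// order in which the LRs were selected. Lines are joined with "\n"; an
// HTML tooltip would use <br> instead.
function buildCountTooltip(selected: LanguageResource[], rows: ResultRow[]): string {
  const counts = new Map<number, number>();
  for (const lr of selected) counts.set(lr.id, 0);
  for (const row of rows) {
    const current = counts.get(row.languageResourceId);
    // Rows of LRs that are not selected are ignored.
    if (current !== undefined) counts.set(row.languageResourceId, current + 1);
  }
  return selected
    .map((lr) => `${lr.name} (ID ${lr.id}): ${counts.get(lr.id) ?? 0}`)
    .join("\n");
}
```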

Atomic delete

Drop the internal key from atomic delete requests. Send all segment data instead.

Result table

Remove the internal key and segment ID columns.

The actions cell of each segment gets two new buttons:

- Delete all with same source:

  - On click, the FE sends the source text and the list of selected LRs.

  - On an OK response from the back end, the FE removes all segments with the same source text from the already loaded list of found segments.

  - Other fields do not matter.

- Delete all with same source + target:

  - On click, the FE sends the source text, the target text and the list of selected LRs.

  - On an OK response from the back end, the FE removes all segments with the same source and target text from the already loaded list of found segments.

  - Other fields do not matter.
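One way the FE could drop matching rows from the already loaded list after an OK response, as a sketch. `removeMatchingRows`, `SegmentRow` and `DeleteCriteria` are hypothetical names, and plain string equality on source and target is an assumption (the ticket does not say whether tags or whitespace are normalised). Leaving `target` undefined gives the "same source" behaviour; setting it gives "same source + target".

```typescript
// Hypothetical row shape; only the fields relevant for matching are listed.
interface SegmentRow {
  languageResourceId: number;
  source: string;
  target: string;
}

// Payload of the two new buttons (hypothetical shape).
interface DeleteCriteria {
  source: string;
  target?: string; // undefined for "Delete all with same source"
  languageResourceIds: number[];
}

// Returns the loaded rows without the ones the BE is deleting.
// All fields other than source/target are ignored, as the ticket says.
function removeMatchingRows(rows: SegmentRow[], criteria: DeleteCriteria): SegmentRow[] {
  const lrIds = new Set(criteria.languageResourceIds);
  return rows.filter((row) => {
    const inSelectedLr = lrIds.has(row.languageResourceId);
    const sameSource = row.source === criteria.source;
    const sameTarget = criteria.target === undefined || row.target === criteria.target;
    return !(inSelectedLr && sameSource && sameTarget);
  });
}
```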

Each mass deletion operation (Delete all and the two new buttons) should be a blocking operation. On the BE we will introduce locks for the affected LRs: if the user tries to scroll or fetch new data while a deletion is still ongoing, the BE will return HTTP status 226 IM Used. This status indicates that new search (scroll) queries are currently NOT possible. The user can, of course, still work with the current state of the result table, so he/she should still be able to schedule bulk deletion of further segments or do atomic deletes.

If a search runs into this status, the user should be informed:

- on click of the Search button: with an info popup

- on scroll: with the message "wait for end processing of deletion request" at the end of the list instead of "Loading new data"
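A sketch of the FE reaction to the lock status. One detail worth noting: 226 is a 2xx code, so `fetch()` reports `response.ok === true` for it and the status has to be checked explicitly. The function and action names and the popup text are hypothetical; only the footer message comes from this ticket.

```typescript
// HTTP 226 "IM Used": sent by the BE while a mass deletion locks an
// affected LR. Note: fetch() treats it as ok because it is in 200-299.
const IM_USED = 226;

type RequestKind = "search" | "scroll";

// Hypothetical UI actions.
type UiAction =
  | { kind: "popup"; message: string }
  | { kind: "listFooter"; message: string }
  | { kind: "proceed" };

// Popup text is a hypothetical placeholder; the footer text is from the ticket.
const POPUP_TEXT = "A deletion is still in progress. Please try again later.";
const FOOTER_TEXT = "wait for end processing of deletion request";

function reactToSearchStatus(status: number, request: RequestKind): UiAction {
  if (status !== IM_USED) {
    // Any other status: the existing handling (results or error) applies.
    return { kind: "proceed" };
  }
  return request === "search"
    ? { kind: "popup", message: POPUP_TEXT }
    : { kind: "listFooter", message: FOOTER_TEXT };
}
```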

The Delete all button will now always send an array of selected LRs instead of a single one.

Search queries for each LR should be sent to the BE concurrently.

This can be done with a queue (any implementation) that ensures no more than 6 XHRs are active at a time (browser limitation).

So when the Search button is clicked, we start at most 6 requests; if more LRs are selected, the remaining requests are placed in the queue. Whenever one request is fulfilled, the next request in the queue is taken and processed.
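A minimal concurrency-limited queue, assuming promise-based requests; `RequestQueue` is a hypothetical name and any equivalent implementation would do. At most `limit` tasks run at once; the rest wait in FIFO order and start as soon as a running one settles, whether it succeeded or failed.

```typescript
// Runs async tasks with at most `limit` in flight; the rest wait in FIFO order.
class RequestQueue {
  private active = 0;
  private readonly waiting: Array<() => void> = [];

  constructor(private readonly limit: number = 6) {}

  run<T>(task: () => Promise<T>): Promise<T> {
    return new Promise<T>((resolve, reject) => {
      const start = (): void => {
        this.active++;
        // Frees the slot and starts the next queued task, if any.
        const release = (): void => {
          this.active--;
          const next = this.waiting.shift();
          if (next) next();
        };
        // Wrapping also turns a synchronous throw in task() into a rejection.
        new Promise<T>((inner) => inner(task())).then(
          (value) => { release(); resolve(value); },
          (error: unknown) => { release(); reject(error); },
        );
      };
      if (this.active < this.limit) {
        start();
      } else {
        this.waiting.push(start);
      }
    });
  }
}
```

Usage would look like `Promise.all(selectedLrIds.map((id) => queue.run(() => searchInLr(id))))`, where `searchInLr` stands for the existing per-LR search request.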

If the user has already started scrolling, pagination requests are placed in the queue and processed in sequence. By default (without user scrolling), up to 2000 segments altogether should still be loaded.

Please also keep in mind that https://jira.translate5.net/browse/TRANSLATE-4290 should still work as outlined there.

Please also keep in mind the back-end concurrency with split TMs, which might deliver more results to the front end in one response than the front end requested.
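The sequential pagination and the 2000-segment default could be sketched as follows, assuming promise-based requests. `SerialQueue` and `PreloadBudget` are hypothetical names. The budget counts the rows the BE actually delivered rather than the number requested, because with split TMs a single response can contain more results than asked for.

```typescript
// Processes pagination requests strictly one after another: each task
// starts only after the previous one has settled.
class SerialQueue {
  private tail: Promise<unknown> = Promise.resolve();

  enqueue<T>(task: () => Promise<T>): Promise<T> {
    const result = this.tail.then(task);
    // Keep the chain going even if this task fails.
    this.tail = result.then(() => undefined, () => undefined);
    return result;
  }
}

// Default number of segments loaded altogether without user scrolling.
const DEFAULT_PRELOAD_LIMIT = 2000;

// Counts delivered rows, which may exceed the requested page size.
class PreloadBudget {
  private loaded = 0;

  constructor(private readonly limit: number = DEFAULT_PRELOAD_LIMIT) {}

  add(deliveredCount: number): void {
    this.loaded += deliveredCount;
  }

  exhausted(): boolean {
    return this.loaded >= this.limit;
  }

  get total(): number {
    return this.loaded;
  }
}
```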

Hint for front-end and back-end

- It might happen that the back end receives a deletion request for a segment that was already deleted, e.g. via a search in another tab on the same TM. In that case, the front end should behave as if the segment was simply deleted.
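A sketch of the FE side, assuming (this is not stated in the ticket) that the BE answers HTTP 404 when the segment no longer exists; if the BE signals this differently, only the check changes. `outcomeOfDelete` and `DeleteOutcome` are hypothetical names.

```typescript
// How the FE should treat the result of an atomic delete.
type DeleteOutcome = "removeRow" | "showError";

// Assumption: the BE answers 404 when the segment is already gone
// (e.g. deleted from another tab). The ticket does not specify this.
const SEGMENT_NOT_FOUND = 404;

function outcomeOfDelete(status: number): DeleteOutcome {
  const succeeded = status >= 200 && status < 300;
  const alreadyDeleted = status === SEGMENT_NOT_FOUND;
  // An already deleted segment is handled exactly like a successful delete.
  return succeeded || alreadyDeleted ? "removeRow" : "showError";
}
```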

Backend handling

Search will be done per language resource, as it is now; parallelism is handled by the front end. We will still send concurrent requests to the split TMs of one LR, so a response can always contain 1 to X results, even if the front end requested only 1 result.

Delete all will start a separate worker per language resource to process the mass deletion with export -> filter -> import logic. The number of LRs processed at a time will be configurable via the maxParallelWorkers config.

Mass deletion should still use export -> filter -> import logic only if the number of results to be deleted exceeds 1000. Otherwise, the back end should still delete them one by one.
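A minimal sketch of the per-LR decision, assuming the 1000-result threshold applies per language resource (one worker per LR). `chooseStrategy`, `planJobs` and the strategy names are hypothetical; how many jobs run at once would still be governed by `maxParallelWorkers`.

```typescript
// Threshold from the ticket: export -> filter -> import only above 1000 results.
const EXPORT_FILTER_IMPORT_THRESHOLD = 1000;

type DeletionStrategy = "exportFilterImport" | "oneByOne";

interface LrHits {
  languageResourceId: number;
  count: number; // number of results to be deleted in this LR
}

interface DeletionJob {
  languageResourceId: number;
  strategy: DeletionStrategy;
}

function chooseStrategy(resultsToDelete: number): DeletionStrategy {
  return resultsToDelete > EXPORT_FILTER_IMPORT_THRESHOLD ? "exportFilterImport" : "oneByOne";
}

// One job (worker) per LR; assumption: the threshold is evaluated per LR.
function planJobs(hits: LrHits[]): DeletionJob[] {
  return hits.map((h) => ({
    languageResourceId: h.languageResourceId,
    strategy: chooseStrategy(h.count),
  }));
}
```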

A new NoReorganiseFuzzySearchService should be created. It reuses most of the FuzzySearchService logic, except that if a reorganise error happens, it simply throws an exception instead of running the actual reorganise.
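The relationship between the two services, modelled in TypeScript purely for illustration: the real services live in the back end, and the `MemoryClient` interface, the `ReorganiseRequiredError` class and the `handleReorganiseError` hook are hypothetical. The subclass reuses the search logic and only replaces the reorganise step with a thrown exception, which lets the caller switch to export -> filter -> import.

```typescript
// Hypothetical error for a t5memory response that normally triggers a reorganise.
class ReorganiseRequiredError extends Error {
  constructor(message = "TM needs reorganise") {
    super(message);
    this.name = "ReorganiseRequiredError";
    // Keep instanceof working when compiling to ES5.
    Object.setPrototypeOf(this, ReorganiseRequiredError.prototype);
  }
}

interface Match {
  internalKey: number;
  source: string;
  target: string;
  matchRate: number;
}

// Hypothetical t5memory client used by both services.
interface MemoryClient {
  fuzzySearch(source: string): Promise<Match[]>;
  reorganise(): Promise<void>;
}

class FuzzySearchService {
  constructor(protected readonly client: MemoryClient) {}

  async search(source: string): Promise<Match[]> {
    try {
      return await this.client.fuzzySearch(source);
    } catch (error) {
      if (!(error instanceof ReorganiseRequiredError)) throw error;
      return this.handleReorganiseError(source);
    }
  }

  // Modelled existing behaviour: reorganise the TM, then search again.
  protected async handleReorganiseError(source: string): Promise<Match[]> {
    await this.client.reorganise();
    return this.client.fuzzySearch(source);
  }
}

// Same search logic, but never reorganises: the error goes to the caller.
class NoReorganiseFuzzySearchService extends FuzzySearchService {
  protected async handleReorganiseError(_source: string): Promise<Match[]> {
    throw new ReorganiseRequiredError();
  }
}
```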

Handlers of new delete buttons

Delete all with same source

The source text and LR IDs are received as parameters.

We schedule one worker per language resource to delete the segments and respond with an OK status right away.

In worker:

We do a fuzzy search with the source text and delete all 100% matches one by one, from the highest internal key to the lowest.

If t5memory responds that the segmentId does not match the internalKey in the delete request, we redo the fuzzy search.

If a reorganise error pops up during the process (fuzzy search or delete), we use export -> filter -> import logic instead of reorganise -> search -> delete.
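The worker flow for "Delete all with same source" in one LR could be sketched like this. The `MemoryClient` interface, `KeyMismatchError`, `ReorganiseRequiredError`, the `exportFilterImport` callback and the `maxSearches` guard are all hypothetical, and treating `matchRate >= 100` as a 100% match is an assumption.

```typescript
// Hypothetical: t5memory reported that segmentId and internalKey do not match.
class KeyMismatchError extends Error {
  constructor() {
    super("segmentId does not match internalKey");
    Object.setPrototypeOf(this, KeyMismatchError.prototype);
  }
}

// Hypothetical: t5memory reported an error that would normally need a reorganise.
class ReorganiseRequiredError extends Error {
  constructor() {
    super("reorganise required");
    Object.setPrototypeOf(this, ReorganiseRequiredError.prototype);
  }
}

interface Match {
  internalKey: number;
  segmentId: number;
  source: string;
  target: string;
  matchRate: number;
}

// Hypothetical t5memory client; both calls may throw the errors above.
interface MemoryClient {
  fuzzySearch(source: string): Promise<Match[]>;
  deleteEntry(match: Match): Promise<void>;
}

async function deleteAllWithSameSource(
  client: MemoryClient,
  source: string,
  exportFilterImport: () => Promise<void>,
  maxSearches = 100, // safety guard, not part of the ticket
): Promise<void> {
  try {
    for (let i = 0; i < maxSearches; i++) {
      // Assumption: matchRate >= 100 marks a 100% match.
      const exact = (await client.fuzzySearch(source))
        .filter((m) => m.matchRate >= 100)
        .sort((a, b) => b.internalKey - a.internalKey); // highest key first
      let mismatch = false;
      for (const match of exact) {
        try {
          await client.deleteEntry(match);
        } catch (error) {
          if (!(error instanceof KeyMismatchError)) throw error;
          mismatch = true; // keys are stale: redo the fuzzy search
          break;
        }
      }
      if (!mismatch) return; // every found match was deleted
    }
    throw new Error(`still key mismatches after ${maxSearches} searches`);
  } catch (error) {
    if (!(error instanceof ReorganiseRequiredError)) throw error;
    // Reorganise error in search or delete: fall back instead of reorganising.
    await exportFilterImport();
  }
}
```

The `maxSearches` guard only prevents an endless loop if key mismatches keep recurring.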

Delete all with same source + target

The source text, target text and LR IDs are received as parameters.

We schedule one worker per language resource to delete the segments and respond with an OK status right away.

In worker:

We do a fuzzy search with the source text and delete, one by one from the highest internal key to the lowest, all 100% matches whose target text is exactly the same as the passed one.

If t5memory responds that a delete was unsuccessful, we redo the search and try to delete the found segments again until none are found.

If a reorganise error pops up during the process (fuzzy search or delete), we use export -> filter -> import logic instead of reorganise -> search -> delete.
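Compared with the same-source worker described above, this one adds the exact target filter and a different retry rule: after an unsuccessful delete it searches again, and it only stops once a search finds nothing. The `MemoryClient` interface (here `deleteEntry` resolving `false` on an unsuccessful delete), `ReorganiseRequiredError`, the `exportFilterImport` callback and the `maxSearches` guard are hypothetical, and `matchRate >= 100` as a 100% match is an assumption.

```typescript
// Hypothetical: t5memory reported an error that would normally need a reorganise.
class ReorganiseRequiredError extends Error {
  constructor() {
    super("reorganise required");
    Object.setPrototypeOf(this, ReorganiseRequiredError.prototype);
  }
}

interface Match {
  internalKey: number;
  source: string;
  target: string;
  matchRate: number;
}

interface MemoryClient {
  fuzzySearch(source: string): Promise<Match[]>;
  // Hypothetical: resolves false when t5memory reports the delete as unsuccessful.
  deleteEntry(match: Match): Promise<boolean>;
}

async function deleteAllWithSameSourceAndTarget(
  client: MemoryClient,
  source: string,
  target: string,
  exportFilterImport: () => Promise<void>,
  maxSearches = 100, // safety guard, not part of the ticket
): Promise<void> {
  try {
    for (let i = 0; i < maxSearches; i++) {
      const found = (await client.fuzzySearch(source))
        .filter((m) => m.matchRate >= 100 && m.target === target)
        .sort((a, b) => b.internalKey - a.internalKey); // highest key first
      if (found.length === 0) return; // nothing left to delete
      for (const match of found) {
        // Unsuccessful delete: stop this pass and search again.
        if (!(await client.deleteEntry(match))) break;
      }
    }
    throw new Error(`matches still present after ${maxSearches} searches`);
  } catch (error) {
    if (!(error instanceof ReorganiseRequiredError)) throw error;
    // Reorganise error in search or delete: fall back instead of reorganising.
    await exportFilterImport();
  }
}
```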

- is blocked by: TRANSLATE-5073 Improve fuzzy and concordance search results providing (Releasable)

- relates to: TRANSLATE-4290 Make concordance search in editor and TM Maintenance NOT scroll down (Done)
